September 4, 2019 feature

A method to introduce emotion recognition in gaming

Virtual Reality (VR) is opening up exciting new frontiers in the development of video games, paving the way for increasingly realistic, interactive and immersive gaming experiences. VR consoles, in fact, allow gamers to feel like they are almost inside the game, overcoming limitations associated with display resolution and latency issues.

An interesting further integration for VR would be emotion recognition, as this could enable the development of games that respond to a user's emotions in real time. With this in mind, a team of researchers at Yonsei University and Motion Device Inc. has recently proposed a deep-learning-based technique that could enable emotion recognition during VR gaming experiences. Their paper was presented at the 2019 IEEE Conference on Virtual Reality and 3D User Interfaces.

For VR to work, users wear head-mounted displays (HMDs), so that a game's content can be presented directly in front of their eyes. Merging emotion recognition tools with VR gaming experiences has thus proven to be challenging, as most machine learning models for the prediction of emotions work by analyzing people's faces; in VR, a user's face is partially occluded by the HMD.

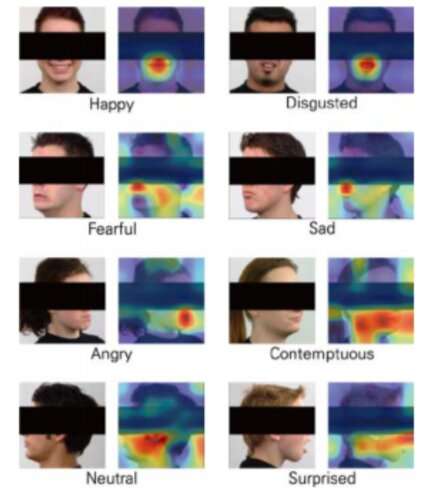

The team of researchers at Yonsei University and Motion Device trained three convolutional neural networks (CNNs), namely DenseNet, ResNet and Inception-ResNet-V2, to predict people's emotions from partial images of faces. They took images from the Radboud Faces Database (RaFD), which includes 8,040 face images of 67 subjects, then edited them by covering the part of the face that would be occluded by the HMD when using VR.

The images used to train the algorithms portray human faces, but the section containing eyes, ears and eyebrows is covered by a black rectangle. When the researchers evaluated their CNNs, they found that they were able to classify emotions even without analyzing these particular features of a person's face, which are thought to be of key importance for emotion recognition.
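To make the masking step concrete, here is a minimal sketch of how a face image could be edited to simulate an HMD. It is not the researchers' code: the function name `occlude_hmd_region`, the toy grayscale image and the band positions (`top_frac`, `bottom_frac`) are all assumptions chosen for illustration, since the article does not give the rectangle's exact placement.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel intensities (0-255)


def occlude_hmd_region(image: Image, top_frac: float = 0.2,
                       bottom_frac: float = 0.55) -> Image:
    """Return a copy of `image` with a full-width horizontal band set to black.

    top_frac and bottom_frac are hypothetical fractions of the image height
    marking where a headset might sit; they are not taken from the paper.
    """
    if not 0.0 <= top_frac < bottom_frac <= 1.0:
        raise ValueError("expected 0 <= top_frac < bottom_frac <= 1")
    height = len(image)
    top = int(height * top_frac)
    bottom = int(height * bottom_frac)
    # Rows inside [top, bottom) become black; all other rows are copied as-is.
    return [
        [0] * len(row) if top <= y < bottom else list(row)
        for y, row in enumerate(image)
    ]


# Toy 10x4 "face" with every pixel at mid-gray, standing in for a real photo.
face = [[128] * 4 for _ in range(10)]
masked = occlude_hmd_region(face)
```

With real photographs, the band would presumably be positioned from detected facial landmarks rather than fixed fractions, so that it covers the same features in every image.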

Overall, the CNN called DenseNet performed better than the others, achieving average accuracies of over 90 percent. Interestingly, however, the ResNet algorithm outperformed the other two in classifying facial expressions that conveyed fear and disgust.
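A model can lead on average accuracy while trailing on individual emotions, because the average hides per-class differences. The sketch below illustrates this with made-up labels for two hypothetical models, `model_a` and `model_b`; the numbers are toy values and do not come from the paper.

```python
from collections import defaultdict
from typing import Dict, List


def overall_accuracy(y_true: List[str], y_pred: List[str]) -> float:
    """Fraction of all samples whose predicted label matches the true label."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_accuracy(y_true: List[str], y_pred: List[str]) -> Dict[str, float]:
    """For each true label, the fraction of its samples predicted correctly."""
    if len(y_true) != len(y_pred):
        raise ValueError("label lists must be of equal length")
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    return {label: correct[label] / total[label] for label in total}


# Toy data only: four "happy" and two "fear" samples.
y_true = ["happy", "happy", "happy", "happy", "fear", "fear"]
model_a = ["happy", "happy", "happy", "happy", "fear", "happy"]
model_b = ["happy", "happy", "fear", "fear", "fear", "fear"]

print(overall_accuracy(y_true, model_a), per_class_accuracy(y_true, model_a))
print(overall_accuracy(y_true, model_b), per_class_accuracy(y_true, model_b))
```

In this toy case, `model_a` has the higher overall accuracy, yet `model_b` classifies every "fear" sample correctly, which mirrors the kind of pattern the researchers report.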

"We successfully trained three CNN architectures that estimate the emotions from the partially covered human face images," the researchers wrote in their paper. "Our study showed the possibility of estimating emotions from images of humans wearing HMDs using machine vision."

The study suggests that in the future, emotion recognition tools could be integrated with VR technology, even if HMDs occlude parts of a gamer's face. In addition, the CNNs the researchers developed could inspire other research teams worldwide to develop new emotion recognition techniques that can be applied to VR gaming.

The researchers are now planning to replace the black rectangles they used in their study with real images of people wearing HMDs. This should ultimately allow them to train the CNNs more reliably and effectively, preparing them for real-life applications.

More information: Hwanmoo Yong et al. Emotion Recognition in Gamers Wearing Head-mounted Display, 2019 IEEE Conference on Virtual Reality and 3D User Interfaces (VR) (2019). DOI: 10.1109/VR.2019.8797736

© 2019 Science X Network