August 23, 2019 feature

A wearable system to assist visually impaired people

New technological advances could have important implications for people affected by disabilities, offering valuable assistance throughout their everyday lives. One key example is the guidance that technological tools could provide to the visually impaired (VI), individuals who are either partially or entirely blind.

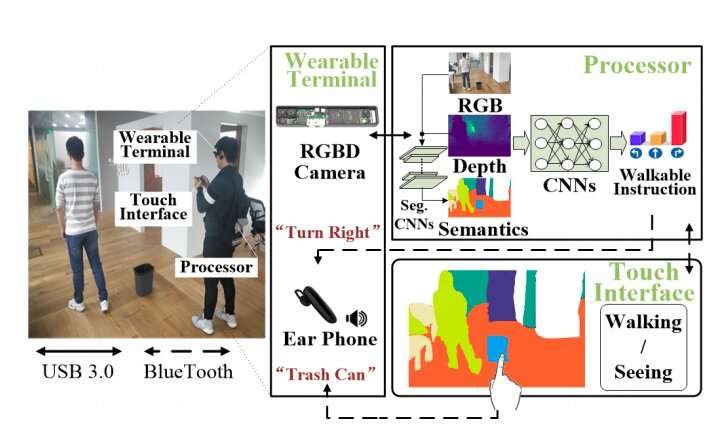

With this in mind, researchers at CloudMinds Technologies Inc., in China, have recently created a new deep learning-powered wearable assistive system for VI individuals. This system, presented in a paper pre-published on arXiv, consists of a wearable terminal, a powerful processor and a smartphone. The wearable terminal has two key components, an RGBD camera and an earphone.

"We present a deep-learning-based wearable system to improve the VI's quality of life," the researchers wrote in their paper. "The system is designed for safe navigation and comprehensive scene perception in real time."

The system developed by the team at CloudMinds essentially collects data from a user's surroundings through the RGBD camera. This data is fed to a convolutional neural network (CNN) that analyses it and predicts the most effective obstacle avoidance and navigation strategies. These strategies, along with other information about the surrounding environment, are then communicated to the user via an earphone.

When building this system, the researchers first developed a data-driven, end-to-end convolutional neural network (CNN) that can generate collision-free instructions as a user moves forward, left, or right, based on RGBD data and associated semantic maps. In addition, they designed a series of interactions that are easy for VI individuals to adopt, in order to provide them with reliable feedback, such as walking instructions for avoiding obstacles and information about their surrounding environment.
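To make the last step of that pipeline concrete, the short Python sketch below shows one way a network's raw scores over the three directions named above could be turned into a single walking instruction. It is an illustration under stated assumptions, not the CloudMinds implementation: the function names, the confidence threshold and the fallback "stop" instruction are all hypothetical, and the scores are supplied by hand rather than produced by a trained CNN.

```python
import math

# The three directions mentioned in the article. Everything else in this
# sketch (names, threshold, "stop" fallback) is an illustrative assumption.
ACTIONS = ("forward", "left", "right")


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def choose_instruction(scores, min_confidence=0.5):
    """Pick the most likely direction, or a hypothetical "stop" fallback
    when no direction is confident enough."""
    if len(scores) != len(ACTIONS):
        raise ValueError("expected one score per action")
    probs = softmax(scores)
    best = max(range(len(ACTIONS)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return "stop"
    return ACTIONS[best]


if __name__ == "__main__":
    # Hand-written scores standing in for the output of a trained network.
    print(choose_instruction([2.0, 0.1, 0.1]))
    print(choose_instruction([0.0, 0.0, 0.0]))
```

In a real system the chosen instruction would be passed to a text-to-speech component and played through the earphone; the cautious fallback here simply reflects the idea that an ambiguous prediction should not be voiced as a confident direction.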

"Our obstacle avoidance engine, which learns from RGBD, semantic map and pilot's choice-of-action input, is able to provide safe feedback about the obstacles and free space surrounding the VI. By making use of the semantic map, we also introduce an efficient interaction scheme implemented to help the VI perceive the 3-D environments through a smartphone."

The researchers tested their system's performance in a series of real-world obstacle avoidance experiments, in which it outperformed existing approaches in several indoor and outdoor scenarios. The findings they gathered during these tests suggest that the system also enhances users' mobility performance and environment perception capabilities in real-world tasks, for instance by helping them to understand the layout of a given room, assisting them in finding a lost object, or conveying nearby traffic conditions.

As part of their study, the researchers collected datasets of obstacle avoidance episodes that contain both instructions to avoid nearby obstacles while walking and other information for perceiving surrounding 3-D environments. These datasets could help research teams to train other deep-learning-based tools for VI individuals.

In the future, the new wearable system developed in this study could provide more effective and in-depth assistance to VI individuals. The team is now planning to integrate a sonar or bump sensor that would improve the users' safety when they are navigating more challenging or unsafe environments.

More information: Deep learning based wearable assistive system for visually impaired people. arXiv:1908.03364 [cs.RO]. arxiv.org/abs/1908.03364

© 2019 Science X Network