August 23, 2018 report

Applying deep learning to motion capture with DeepLabCut

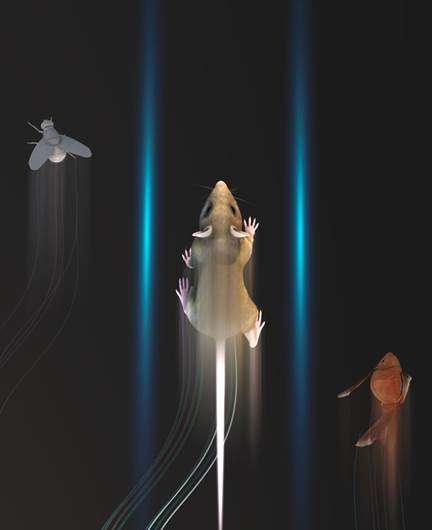

A team of researchers affiliated with several institutions in Germany and the U.S. has developed a deep learning method that can be used for motion capture of almost any kind of animal. In their paper published in the journal Nature Neuroscience, the group describes the tool, called DeepLabCut, explains how it works and shows how to use it. In a News & Views piece in the same issue, Kunlin Wei of Peking University and Konrad Kording of the University of Pennsylvania comment on the work.

As Wei and Kording note, scientists have been trying to apply motion capture to humans and animals for well over a century. The idea is to capture the many small movements that together make up a larger, more noticeable one, such as a single dance step. Tracking such movements in animals offers clues about their biomechanics and about how their brains work; in humans, it can aid physical therapy or help improve sports performance. Conventional approaches either attach reflective markers to the subject or require someone to record video and then tag body parts by hand, frame by frame, which is laborious. In this new effort, the researchers have automated that process, making it much faster and easier.

To create DeepLabCut, the group started from a deep neural network that had already been trained on ImageNet, a database containing a massive number of labeled images, and adapted it to a new task, an approach known as transfer learning. They then trained the network to estimate poses, that is, the positions of user-chosen body parts in each video frame. Finally, they built software that runs the network, interacts with users and outputs the results. The result is a tool that can perform motion capture on humans and virtually any other creature. A user first supplies labeled examples of what they want to track: for instance, frames taken from videos of a squirrel moving about, with its major body parts marked. According to the paper, roughly 200 labeled frames can be enough. The user then uploads video of a subject doing an activity of interest, such as a squirrel cracking open a nut, and the software does the rest, producing motion capture of the activity.
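How the network turns an image into a pose is only sketched above. The paper describes the network's output as, for each body part, a map scoring how likely that part is to be at each location in the frame. The example below is a minimal illustration, not DeepLabCut's actual code: it assumes such score maps are already available as small 2D grids and simply picks the highest-scoring cell for each body part. The names `scoremap_to_keypoint` and `estimate_pose` and the toy numbers are hypothetical, and steps the real tool performs (such as mapping coarse grid cells back to full image resolution) are omitted.

```python
from typing import Dict, List, Tuple

# Hypothetical illustration: one score map per body part, stored as a 2D grid
# (rows = y, columns = x). Higher values mean "this part is more likely here".
ScoreMap = List[List[float]]


def scoremap_to_keypoint(score_map: ScoreMap) -> Tuple[int, int, float]:
    """Return (x, y, score) of the highest-scoring cell; the first maximum wins ties."""
    best_x, best_y, best_score = 0, 0, float("-inf")
    for y, row in enumerate(score_map):
        for x, value in enumerate(row):
            if value > best_score:
                best_x, best_y, best_score = x, y, value
    return best_x, best_y, best_score


def estimate_pose(score_maps: Dict[str, ScoreMap]) -> Dict[str, Tuple[int, int, float]]:
    """Map each body-part name to its most likely grid location and its score."""
    return {part: scoremap_to_keypoint(m) for part, m in score_maps.items()}


if __name__ == "__main__":
    # Made-up 3x4 score maps for two labeled squirrel body parts.
    maps = {
        "nose": [[0.0, 0.1, 0.0, 0.0],
                 [0.1, 0.9, 0.2, 0.0],
                 [0.0, 0.1, 0.0, 0.0]],
        "tail_base": [[0.0, 0.0, 0.0, 0.1],
                      [0.0, 0.0, 0.2, 0.3],
                      [0.0, 0.0, 0.1, 0.8]],
    }
    print(estimate_pose(maps))
```

Running it prints the nose at grid cell (1, 1) and the tail base at (3, 2), each with its score. Repeating this for every frame of a video yields a trajectory per body part, which is the motion-capture output the article describes.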

The team has made the new tool freely available as open-source software. Wei and Kording suggest it could revolutionize motion capture by making it easily accessible to professionals and novices alike.

More information: Alexander Mathis et al. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning, Nature Neuroscience (2018). DOI: 10.1038/s41593-018-0209-y

Abstract

Quantifying behavior is crucial for many applications in neuroscience. Videography provides easy methods for the observation and recording of animal behavior in diverse settings, yet extracting particular aspects of a behavior for further analysis can be highly time consuming. In motor control studies, humans or other animals are often marked with reflective markers to assist with computer-based tracking, but markers are intrusive, and the number and location of the markers must be determined a priori. Here we present an efficient method for markerless pose estimation based on transfer learning with deep neural networks that achieves excellent results with minimal training data. We demonstrate the versatility of this framework by tracking various body parts in multiple species across a broad collection of behaviors. Remarkably, even when only a small number of frames are labeled (~200), the algorithm achieves excellent tracking performance on test frames that is comparable to human accuracy.
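The abstract's claim of tracking "comparable to human accuracy" rests on comparing predicted body-part positions with human labels on test frames. A simple way to express that comparison, similar in spirit to the pixel-error measure the paper reports, is the average Euclidean distance between predicted and labeled positions. The sketch below uses a hypothetical function name, `mean_pixel_error`, and made-up coordinates; it is an illustration under those assumptions, not the paper's evaluation code.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) in pixels


def mean_pixel_error(predicted: List[Dict[str, Point]],
                     labeled: List[Dict[str, Point]]) -> float:
    """Hypothetical helper: average Euclidean distance in pixels between
    predicted and human-labeled positions, over all frames and body parts."""
    if len(predicted) != len(labeled):
        raise ValueError("need one prediction per labeled frame")
    distances = []
    for pred_frame, label_frame in zip(predicted, labeled):
        # Only body parts that a human actually labeled are scored.
        for part, (lx, ly) in label_frame.items():
            px, py = pred_frame[part]
            distances.append(math.hypot(px - lx, py - ly))
    if not distances:
        raise ValueError("no labeled body parts to compare")
    return sum(distances) / len(distances)


if __name__ == "__main__":
    # Two made-up test frames with two body parts each.
    pred = [{"nose": (10.0, 10.0), "paw": (20.0, 20.0)},
            {"nose": (0.0, 0.0), "paw": (5.0, 5.0)}]
    human = [{"nose": (13.0, 14.0), "paw": (20.0, 20.0)},
             {"nose": (6.0, 8.0), "paw": (5.0, 5.0)}]
    print(mean_pixel_error(pred, human))  # (5 + 0 + 10 + 0) / 4 = 3.75
```

Applying the same function to two different people's labels of the same frames gives a rough baseline for human variability, against which the network's error can be compared.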

Journal information: Nature Neuroscience

© 2018 Tech Xplore