Novel software can recognize eye contact in everyday situations

Human eye contact is an important source of information. However, the ability of computer systems to recognize eye contact in everyday situations is very limited. Computer scientists at Saarland University and the Max Planck Institute for Informatics have now developed a method that detects eye contact regardless of the type and size of the target object, the position of the camera, or the environment.

"Until now, if you were to hang an advertising poster in a pedestrian zone, you could not determine how many people actually looked at it," explains Andreas Bulling at Saarland University and the Max Planck Institute for Informatics. Previously systems attempted to capture this information by measuring gaze direction. This required special eye tracking equipment that needed minutes-long calibration, and subjects had to wear a tracker. Real-world studies, such as in a pedestrian zone, or even just with multiple people, were in the best case very complicated and in the worst case, impossible.

Even using machine learning with a camera placed at the target poster, only glances at the camera itself could be recognized. Too often, the difference between the training data and the data in the target environment was too great. It was prohibitively difficult to develop a universal eye contact detector suitable for both small and large target objects, in stationary and mobile situations, tracking one user or a whole group, or under changing lighting conditions.

Together with researchers Xucong Zhang and Yusuke Sugano, Bulling has developed a method based on a new generation of algorithms for estimating gaze direction. These algorithms rely on a special type of deep neural network, a technology now at the forefront of many areas of industry and business. Bulling and his colleagues have worked on this approach for two years and have advanced it step by step. In the method they are now presenting, the estimated gaze directions are first grouped by clustering. Clustering sorts similar data points into groups without being told what defines each group; with the same strategy it is possible, for example, to distinguish apples from pears according to various characteristics without explicitly specifying how the two differ.

In a second step, the system identifies the most likely clusters, and the resulting gaze direction estimates are used to train an eye contact detector specific to the target object. A decisive advantage of this procedure is that it requires no involvement from the user, and the method improves the longer the camera remains next to the target object and records data. "In this way, our method turns normal cameras into eye contact detectors, without the size or position of the target object having to be known or specified in advance," explains Bulling.
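The two steps can be illustrated with a minimal sketch. It is not the researchers' implementation. It assumes that each gaze estimate has already been reduced to a 2D point in a plane with the camera at the origin, it uses plain k-means as a stand-in for whatever clustering the authors chose, and it treats the cluster nearest the camera as the target, which is the assumption the article attributes to the current method. All function names and the synthetic data are hypothetical.

```python
import math
import random

def dist(a, b):
    """Euclidean distance between two 2D points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])

def init_centers(points, k):
    """Deterministic start: the first point, then repeatedly the point
    farthest from all centers chosen so far."""
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(dist(p, c) for c in centers)))
    return centers

def assign(points, centers):
    """Index of the nearest center for every point."""
    return [min(range(len(centers)), key=lambda j: dist(p, centers[j])) for p in points]

def kmeans(points, k, iters=20):
    """Plain k-means (a stand-in clustering step); returns (centers, labels)."""
    centers = init_centers(points, k)
    for _ in range(iters):
        labels = assign(points, centers)
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old center if a cluster is empty
                centers[j] = (sum(m[0] for m in members) / len(members),
                              sum(m[1] for m in members) / len(members))
    return centers, assign(points, centers)

def label_eye_contact(gaze_points, k, camera=(0.0, 0.0)):
    """Cluster gaze estimates and mark samples in the cluster nearest the
    camera as eye contact (assumed target-selection rule)."""
    centers, labels = kmeans(gaze_points, k)
    target = min(range(k), key=lambda j: dist(centers[j], camera))
    return [lab == target for lab in labels], centers[target]

# Synthetic gaze estimates: one group near the camera, one far from it.
rng = random.Random(0)
near = [(rng.gauss(0.0, 0.02), rng.gauss(0.05, 0.02)) for _ in range(100)]
far = [(rng.gauss(0.6, 0.02), rng.gauss(0.4, 0.02)) for _ in range(60)]
gaze = near + far

is_contact, center = label_eye_contact(gaze, k=2)
print(sum(is_contact), "of", len(gaze), "samples labeled as eye contact")
```

The resulting boolean labels play the role of automatically generated training data: in a full system they would be paired with the corresponding camera images to train the target-specific detector, with no manual annotation.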

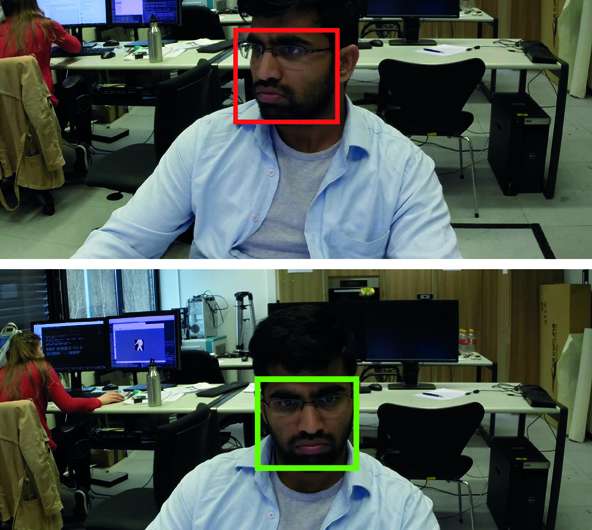

The researchers tested their method in two scenarios: in a workspace, the camera was mounted on the target object; in an everyday situation, a user wore an on-body camera. Because the method acquires the necessary knowledge on its own, it is robust to variations in the number of people involved, the lighting conditions, the camera position, and the types and sizes of target objects.

However, Bulling says, "We can, in principle, identify eye contact clusters on multiple target objects with only one camera, but the assignment of these clusters to the various objects is not yet possible. Our method currently assumes that the nearest cluster belongs to the target object, and ignores the other clusters. This limitation is what we will tackle next." Nevertheless, he says, "The method we present is a great step forward. It paves the way not only for new user interfaces that automatically recognize eye contact and react to it, but also for measurements of eye contact in everyday situations, such as outdoor advertising, that were previously impossible."

More information: Xucong Zhang, Yusuke Sugano and Andreas Bulling. Everyday Eye Contact Detection Using Unsupervised Gaze Target Discovery. Proc. ACM UIST 2017. perceptual.mpi-inf.mpg.de/file … /05/zhang17_uist.pdf

Xucong Zhang, Yusuke Sugano, Mario Fritz and Andreas Bulling. Appearance-Based Gaze Estimation in the Wild. Proc. IEEE CVPR 2015, 4511-4520. perceptual.mpi-inf.mpg.de/file … /04/zhang_CVPR15.pdf

Xucong Zhang, Yusuke Sugano, Mario Fritz and Andreas Bulling. It's Written All Over Your Face: Full-Face Appearance-Based Gaze Estimation. Proc. IEEE CVPRW 2017. perceptual.mpi-inf.mpg.de/file … /zhang_cvprw2017.pdf